Walk into a CISO’s office today, and the conversation isn’t just about firewalls, zero trust, or endpoint detection anymore. It’s about something fuzzier, newer, and more dangerous: agentic AI. Unlike traditional models that respond to prompts, agentic AI systems can act, plan, and execute tasks without waiting for constant human input. They’re not tools in the old sense. They’re actors in a digital ecosystem.

That shift opens doors for productivity, but it also opens a Pandora’s box of cyber risks. A Salesforce admin bot that books calendar entries might, with one wrong prompt injection, start scraping confidential files. A DevOps assistant could accidentally spin up shadow services, bypassing logging. An autonomous fraud detection agent could itself be hijacked to commit fraud.

This is why security professionals are calling agentic AI threats the black box of tomorrow’s cybercrime. The power is real, but so are the risks.

This guide walks through what agentic AI means, real-world threat examples, how frameworks like OWASP’s Agentic AI Threats and Mitigations help, and what enterprises can do to prepare.

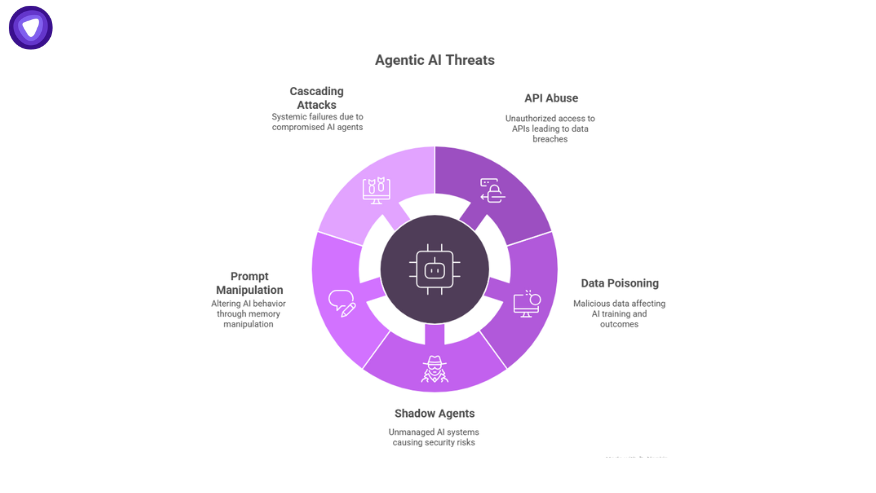

- Agentic AI: Autonomous systems that act without constant human input, creating new attack surfaces.

- Key Risks: API abuse, data poisoning, shadow agents, memory manipulation, and cascading failures.

- Cyber Threats: Agents exploited to exfiltrate data, move laterally, and persist in IT systems.

- Major Effects: Faster attacks, harder attribution, compliance gaps, and operational instability.

- Mitigations: OWASP frameworks, sandboxing, audit logs, kill switches, and agent identity lifecycle controls.

- Enterprise Action: Map agents, apply Zero Trust, red-team autonomous abuse, and train staff on AI-driven threats.

- PureVPN White Label: Encrypts agent traffic, closes data-in-transit gaps, and helps resellers bundle compliance-ready solutions.

What Is Agentic AI?

Agentic AI describes artificial intelligence systems with autonomy: they don’t just generate answers, they execute tasks, make decisions, and chain together actions toward goals.

Where a traditional LLM might answer, “Here’s how to draft an email,” an agentic AI system will write the email, attach files, schedule the meeting, and push it into your CRM.

That autonomy has huge benefits for enterprises:

- Automating repetitive IT operations.

- Managing customer support with fewer agents.

- Handling DevOps pipelines and cloud infrastructure.

- Running proactive threat detection at scale.

But autonomy changes the security equation. An AI that acts is an AI that can act wrong or act maliciously if hijacked.

What Are the Risks of Using Agentic AI?

The risks of agentic AI include identity spoofing, data poisoning, API abuse, shadow agent creation, memory manipulation, and cascading multi-agent failures.

Why? Because once you let an agent operate autonomously, you’re giving it access to systems and data. If that access is compromised, you’ve multiplied the impact.

Agentic AI Threats Examples

Let’s ground this with concrete cases.

1. API Abuse and Credential Replay

Agents often interact with APIs directly. If strong identity and session controls don’t protect those APIs, attackers can steal agent credentials or replay API calls. Imagine an autonomous finance bot pulling invoices hijacked, it could flood systems with fake invoices that look legitimate.

2. Data Poisoning at Scale

Traditional poisoning affects one model. With agents, poisoning can ripple across ecosystems. A malicious dataset could train a customer-support agent to subtly misclassify tickets, letting fraud slip through.

3. Unmonitored Shadow Agents

Enterprises already battle shadow IT. Now add shadow agents: autonomous systems spun up by developers or departments, untracked and unmanaged. These can leak sensitive data or break compliance controls without anyone knowing.

4. Prompt and Memory Manipulation

Long-running agents keep memory. Attackers can slip poisoned prompts into those memory chains, guiding agents to expose secrets or change behaviors days later.

5. Cascading Attacks in Multi-Agent Systems

In setups where agents collaborate, a single compromised agent can cascade failure across the network. One rogue assistant feeding bad outputs into a supply chain of other agents can lead to systemic collapse.

These are no longer theoretical. Security researchers and red teams have already demonstrated agentic AI threats examples that chain tasks together to exfiltrate data, bypass MFA, or pivot laterally inside corporate networks.

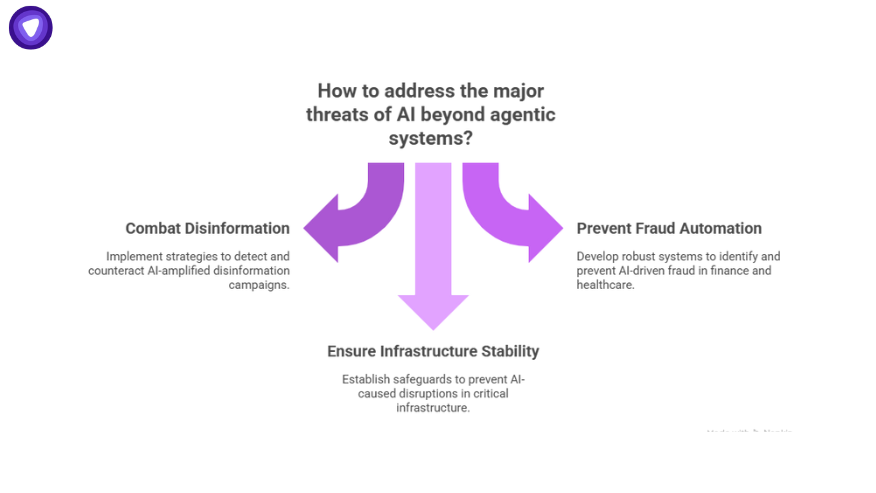

What Are the Major Threats of AI Beyond Agentic Systems?

While agentic AI magnifies risks, broader AI adoption carries threats too:

- Disinformation campaigns amplified by generative models.

- Fraud automation in finance and healthcare.

- Infrastructure disruption when AI makes decisions in critical systems.

The point is this: traditional AI threats don’t go away with agentic systems. They get faster, more automated, and harder to detect.

What Is Agentic AI Cyber Threats?

Agentic AI cyber threats are malicious uses of autonomous AI agents to compromise, exploit, or persist in IT environments.

Where classic cyberattacks needed humans to script and push, agentic threats are semi-independent. Attackers can set a malicious agent loose and let it work 24/7.

Examples:

- An agent designed to scrape LinkedIn for sales leads repurposed to scrape for password reset emails.

- A DevOps deployment agent manipulated to open unauthorized ports.

- Attackers chaining compromised Salesloft drift-style integrations to move laterally.

This is why experts like those at CyberArk, Akamai, and Guardicore AI are warning: agentic AI is not just a productivity tool. It’s a new attack surface.

The Effects of Agentic AI

- Faster attack cycles. Agents don’t sleep or wait.

- Blurred attribution. Was it a user, a rogue agent, or an attacker pulling strings?

- Compliance risk. GDPR, HIPAA, ISO 27001 all assume humans are accountable. Agents make that messy.

- Trust erosion. Customers may hesitate to interact with systems where autonomous agents act unpredictably.

For businesses, these effects aren’t abstract. They hit contracts, audits, and customer trust directly.

Frameworks and Emerging Mitigations

The good news: security communities are building frameworks.

- Agentic AI Threats and Mitigations OWASP: a working project identifying vulnerabilities unique to agentic AI. It maps issues like memory manipulation and shadow agents into a taxonomy and suggests mitigations.

- Key recommendations:

- Treat agents as identities with privileges.

- Limit agent scopes to least privilege.

- Sandbox agents and log every action.

- Build lifecycle controls for onboarding and offboarding.

- Treat agents as identities with privileges.

This is a start, but it’s clear most enterprises don’t yet have operationalized policies for autonomous systems.

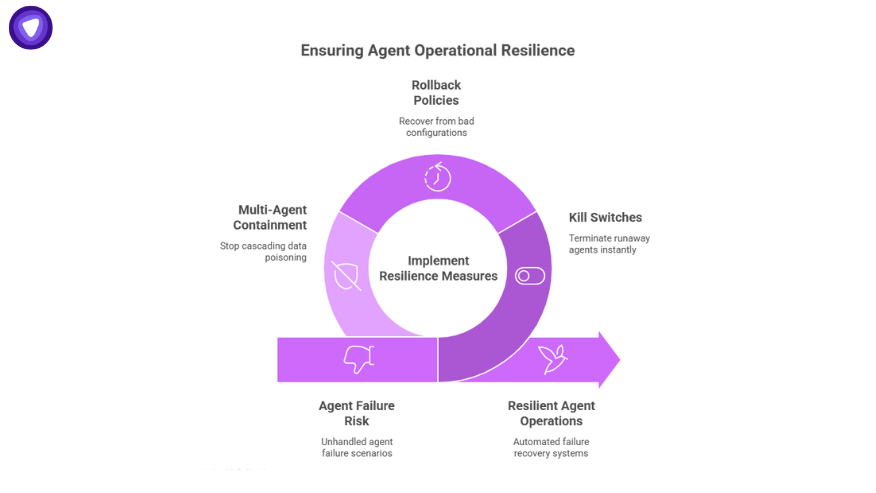

Operational Resilience – Preparing for Agent Failure

One of the most overlooked areas: what happens when agents fail?

- Kill Switches: Organizations need the ability to terminate runaway agents instantly.

- Rollback Policies: If an agent deploys a bad config, systems must recover automatically.

- Multi-Agent Containment: Detecting when one agent’s bad data is poisoning others, and stopping the cascade.

These aren’t common yet in enterprise playbooks. But they need to be.

Compliance and Governance Risks

Here’s where regulators step in.

- Non-human identities: Treat agents like employees. Give them onboarding, monitoring, and offboarding lifecycles.

- Audit logging: Every agent action needs to be traceable for compliance frameworks.

- Role-based controls: Agents shouldn’t run with root or global admin privileges by default.

- Privacy considerations: If agents process PII, GDPR and HIPAA rules apply just as if a human did.

Without governance, agentic AI could push enterprises into compliance non-compliance faster than traditional IT mistakes.

Encrypting Agentic AI Traffic with PureVPN White Label

Here’s one gap most organizations don’t cover: securing traffic once agents send or receive data. Policies lock down devices. Sandboxes protect runtime. But data in transit is often left exposed.

That’s where VPN encryption comes in.

With PureVPN White Label, resellers and MSPs can:

- Offer per-app or full-device VPN that integrates with EMM and IAM policies.

- Ensure agent-driven traffic is encrypted end to end.

- Help clients meet HIPAA, ISO 27001, and GDPR requirements.

- Add recurring revenue streams by bundling VPN into compliance-grade packages.

Conclusion

Agentic AI isn’t science fiction. It’s in enterprise software today, quietly running workflows and handling sensitive data. But the autonomy that makes it powerful also makes it unpredictable.

The lesson is clear:

- Agents expand your attack surface.

- They demand governance, lifecycle management, and technical safeguards.

- Without those, attackers will exploit them as the next big cybercrime tool.

Enterprises that prepare now, with governance frameworks, defense-in-depth, and encrypted transit via VPNs can turn agentic AI from a black box of risk into a competitive advantage.