Web scraping is one of the most exciting ways to collect data from the internet. Whether you’re tracking product prices, analyzing competitor websites, or gathering research data, scraping helps you transform raw HTML into structured, usable insights.

Getting started is much easier than most people think. In this guide, you’ll build your first web scraper in 5 minutes or less, learn the basics of how scraping works, and discover how to scale your scraping projects without getting blocked.

What is Web Scraping?

In simple terms, web scraping is the process of extracting information from websites using automated scripts or tools. Instead of manually copying and pasting, scrapers let you pull hundreds (or even thousands) of pages in minutes.

What Tools Will You Need Before You Start Web Scraping?

For this 5-minute scraper, you’ll only need:

- Python 3 installed on your computer

- A package manager like pip

- The libraries requests and BeautifulSoup (for fetching and parsing HTML)

To install them, run:

pip install requests beautifulsoup4

If you’re new to Python, these two names might look intimidating. Don’t worry, they’re just helper tools that make scraping easy:

- Requests → It is like a browser inside Python. It fetches the webpage’s HTML so your code can read it.

- BeautifulSoup → Acts like a filter. It helps you sift through messy HTML and pick out the exact text, links, or tags you need.

That’s it. You’re ready.
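To make the "filter" idea concrete, here is a minimal sketch that runs BeautifulSoup on a small HTML string instead of a live page. The HTML snippet, the shop name, and the price class are made up for illustration; only the BeautifulSoup calls themselves are real.

```python
from bs4 import BeautifulSoup

# A tiny, made-up HTML snippet standing in for a downloaded page.
SAMPLE_HTML = """
<html><body>
  <h1>Example Shop</h1>
  <p class="price">$19.99</p>
  <a href="/products/1">First product</a>
  <a href="/products/2">Second product</a>
</body></html>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

title = soup.find("h1").get_text()                 # text of the first <h1>
price = soup.find("p", class_="price").get_text()  # first <p class="price">
links = [a["href"] for a in soup.find_all("a")]    # href of every <a> tag

print(title)
print(price)
print(links)
```

In a real scraper, the string would come from Requests rather than from a constant in your file.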

How to Build Your First Web Scraper Step by Step

Even if you’ve never coded before, this guide will walk you through building a simple web scraper.

Step 1: Install Python (If You Don’t Have It)

- Go to python.org/downloads.

- Download the latest version and install it.

- To check if it’s installed, open your terminal/command prompt and type: python --version

Step 2: Install the Libraries You Need

Open your terminal/command prompt and type:

pip install requests beautifulsoup4

- requests → visits websites.

- BeautifulSoup → digs through the page and finds what you want.
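If you want to confirm the install worked before writing any scraping code, a quick check like the one below can help. It is only a sketch: the helper name missing_packages is made up for this example, and it relies on the fact that the beautifulsoup4 package is imported under the name bs4.

```python
import importlib.util
import sys


def missing_packages(module_names):
    """Return the module names that cannot be found in this Python environment."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]


print("Python version:", sys.version.split()[0])

# "bs4" is the import name of the beautifulsoup4 package.
missing = missing_packages(["requests", "bs4"])
if missing:
    print("Still missing:", ", ".join(missing))
else:
    print("requests and BeautifulSoup are installed.")
```

If anything is listed as missing, run the pip install command again in the same terminal.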

Step 3: Create Your Scraper File

- Open a text editor (Notepad works, but VS Code is better).

- Create a new file and save it as scraper.py.

Step 4: Add This Code

Copy-paste this code into your file:

# Import tools
import requests
from bs4 import BeautifulSoup

# Website we want to scrape
URL = "http://quotes.toscrape.com"

# Visit the website
response = requests.get(URL)

# Organize the HTML
soup = BeautifulSoup(response.text, "html.parser")

# Find all quotes on the page
quotes = soup.find_all("span", class_="text")

# Print them out
for i, quote in enumerate(quotes, 1):
    print(f"{i}. {quote.get_text()}")

Step 5: Run the Scraper

In your terminal/command prompt, go to the folder where you saved scraper.py and type: python scraper.py

Step 6: See the Magic

Your computer will print the quotes as clean text instead of raw HTML, like this:

1. “The world as we have created it is a process of our thinking. It cannot be changed without changing our thinking.”

2. “It is our choices, Harry, that show what we truly are, far more than our abilities.”

3. “There are only two ways to live your life. One is as though nothing is a miracle. The other is as though everything is a miracle.”

And just like that, you’ve built your first web scraper!
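Once the basic version works, a natural next step is to capture structured records and fail loudly when a request goes wrong. The sketch below is one way to do that. It assumes each quote on the page sits in a div with class "quote" that contains a span with class "text" and a small tag with class "author"; the function names fetch_html and parse_quotes are made up for this example.

```python
import requests
from bs4 import BeautifulSoup

URL = "http://quotes.toscrape.com"


def fetch_html(url):
    """Download a page, failing loudly on timeouts or HTTP error codes."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # raises requests.HTTPError on 4xx/5xx
    return response.text


def parse_quotes(html):
    """Turn the page HTML into a list of {"text", "author"} dictionaries."""
    soup = BeautifulSoup(html, "html.parser")
    results = []
    # Assumed layout: each quote lives in <div class="quote">.
    for block in soup.find_all("div", class_="quote"):
        text = block.find("span", class_="text")
        author = block.find("small", class_="author")
        if text is None or author is None:
            continue  # skip blocks that do not match the assumed layout
        results.append({
            "text": text.get_text(strip=True),
            "author": author.get_text(strip=True),
        })
    return results
```

To use it, call parse_quotes(fetch_html(URL)) and loop over the returned list. Keeping the download and the parsing in separate functions means you can test the parsing on a saved HTML file without hitting the site again.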

Why Does Web Scraping Become a Challenge?

Your first scraper usually works great when you’re scraping a friendly test site. But once you try scraping big, real-world sites like Amazon, Google, or LinkedIn, things often go sideways. Here are the most common issues newcomers face.

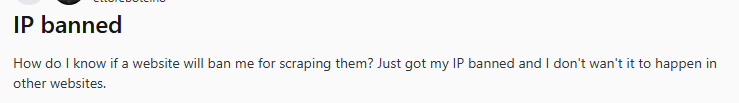

IP Blocks / Bans

You might get 403 Forbidden, 429 Too Many Requests, or simply “Access Denied.” People report that after a bunch of requests from the same IP, their access gets shut off.

CAPTCHAs & Bot-Detection

Sites ask you to prove you’re human. Sometimes they trigger CAPTCHA automatically when they see many requests. Amazon is notorious for this: heavy bot detection, interactive challenges. When your scraper reaches that point, it can’t proceed without solving the CAPTCHA. Some tools exist to solve this, but they add complexity.
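A scraper that cannot tell a block page from real content will happily save garbage. The sketch below shows one rough way to flag the status codes and CAPTCHA pages described above. It is a heuristic only: the marker strings are hypothetical examples, and real block pages differ from site to site.

```python
BLOCK_STATUS_CODES = {403, 429}

# Hypothetical marker strings; real block pages vary from site to site.
BLOCK_MARKERS = ("captcha", "access denied", "unusual traffic")


def looks_blocked(status_code, body):
    """Heuristically decide whether a response is a block page, not real content."""
    if status_code in BLOCK_STATUS_CODES:
        return True
    lowered = body.lower()
    return any(marker in lowered for marker in BLOCK_MARKERS)
```

With Requests, you would call looks_blocked(response.status_code, response.text) right after each request and stop or slow down when it returns True.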

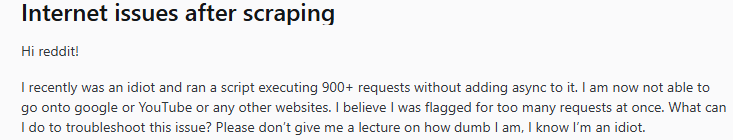

Rate Limiting

If your scraper sends too many requests per second or minute, servers slow down your access or temporarily block your IP. A Reddit user recalls sending about 900 requests too quickly and then losing access.
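One common way to cope with rate limits is to wait and retry, waiting longer each time. The sketch below assumes the server signals rate limiting with a 429 status and may send a numeric Retry-After header. The names polite_get and backoff_delays are made up here, and session can be any object with a Requests-style get method, such as requests.Session().

```python
import time


def backoff_delays(base_delay=1.0, max_retries=4):
    """Exponential waits: base, 2x base, 4x base, and so on."""
    return [base_delay * (2 ** attempt) for attempt in range(max_retries)]


def polite_get(session, url, base_delay=1.0, max_retries=4, sleep=time.sleep):
    """GET a URL, waiting and retrying while the server answers 429.

    `session` is anything with a requests-style .get(), e.g. requests.Session().
    """
    response = session.get(url, timeout=10)
    for delay in backoff_delays(base_delay, max_retries):
        if response.status_code != 429:
            break
        # Prefer the server's own hint when it sends a numeric Retry-After header.
        retry_after = response.headers.get("Retry-After", "")
        sleep(float(retry_after) if retry_after.isdigit() else delay)
        response = session.get(url, timeout=10)
    return response
```

If the last retry still returns 429, the function hands that response back, so the caller should check the status code before parsing.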

Regional Limits

Some content is only visible if your IP is from certain countries. Other times, content or prices differ by region. If you try scraping from a non-supported region, you see different data or no data at all. Proxies with geographic diversity are a solution.

Changing Layouts / Dynamic Content

HTML structure changes frequently, so what worked yesterday can fail today. Some content is also loaded dynamically via JavaScript (AJAX, infinite scroll), so requests + BeautifulSoup alone won’t see it because it’s not present in the initial HTML. Either way, your script can break.
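A small defensive habit is to try more than one selector and raise an error when none of them match, rather than silently returning nothing. The sketch below is one way to do it. The function name select_first_match and the selector "span.quote-text" are hypothetical. An empty result can mean either that the layout changed or that the content is rendered by JavaScript.

```python
from bs4 import BeautifulSoup


def select_first_match(html, selectors):
    """Try CSS selectors in order; return (selector, texts) for the first that matches."""
    soup = BeautifulSoup(html, "html.parser")
    for selector in selectors:
        elements = soup.select(selector)
        if elements:
            return selector, [el.get_text(strip=True) for el in elements]
    # Nothing matched: the layout changed or the content is rendered by JavaScript.
    raise LookupError(f"No selector matched: {selectors}")
```

For example, select_first_match(html, ["span.text", "span.quote-text"]) keeps working if the site renames the class from the first form to the second, and it raises LookupError as soon as neither is present.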

This is the exact moment where beginners realize that web scraping isn’t just about writing code. It’s about staying undetected, mimicking human behavior, adapting to site defenses, and using smart tools such as good proxies and IP rotation. Without solutions for these challenges, your scraper may be fragile, unreliable, or blocked entirely.

Why Are Proxies Essential for Scraping?

A scraper without proxies is like trying to sneak into a concert with the same fake ID every time. You’ll get caught fast. Proxies mask your IP address by routing requests through different servers. The two main types are:

- Datacenter proxies: Fast but easily detected (sites know they’re not real users).

- Residential proxies: Use real ISP-assigned IPs, making them much harder to block.

So which one is more reliable for scraping? Residential proxies.
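In Requests, routing traffic through a proxy comes down to passing a proxies mapping with each call. The sketch below builds that mapping. The helper name build_proxies and the host proxy.example.com are placeholders, not a real endpoint. Use the host, port, and credentials your proxy provider gives you.

```python
from urllib.parse import quote


def build_proxies(host, port, username=None, password=None):
    """Build the `proxies` mapping that requests.get() accepts."""
    credentials = ""
    if username and password:
        # Percent-encode so characters like "@" or ":" do not break the URL.
        credentials = f"{quote(username, safe='')}:{quote(password, safe='')}@"
    proxy_url = f"http://{credentials}{host}:{port}"
    # The same proxy handles both plain HTTP and HTTPS traffic.
    return {"http": proxy_url, "https": proxy_url}
```

You would then call requests.get(URL, proxies=build_proxies("proxy.example.com", 8080, "user", "secret"), timeout=10), and the target site sees the proxy's IP address instead of yours.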

Why PureVPN’s Residential Proxies Are a Game-Changer for Web Scraping

Scraping small demo sites is easy, but once you target giants, things get messy fast. IP bans, CAPTCHAs, and geo-blocks get in your way. This is where PureVPN’s Residential Proxies become your advantage.

- Access 90,000+ Residential IPs, spread across real devices worldwide.

- Automatic IP Rotation makes every request come from a fresh IP, reducing block risks.

- Geo-targeting lets you scrape region-specific data (like prices in the US vs the UK).

- VPN-grade security keeps you encrypted, private, and safe.
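To illustrate what IP rotation means in general, here is a generic client-side sketch that cycles through a pool of proxy URLs in round-robin order. It is not PureVPN's mechanism or API, which the list above describes as automatic. The class name ProxyRotator and the example proxy URLs are hypothetical.

```python
import itertools


class ProxyRotator:
    """Hand out proxies from a fixed pool in round-robin order."""

    def __init__(self, proxy_urls):
        if not proxy_urls:
            raise ValueError("proxy_urls must not be empty")
        self._cycle = itertools.cycle(proxy_urls)

    def next_proxies(self):
        """Return a requests-style proxies mapping for the next proxy in the pool."""
        proxy_url = next(self._cycle)
        return {"http": proxy_url, "https": proxy_url}
```

Each call to rotator.next_proxies() can be passed as the proxies argument of requests.get, so consecutive requests leave from different addresses.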

How to Set Up a Residential Proxy for Web Scraping

Getting started with PureVPN’s Residential Proxies is quick and straightforward. Just follow these steps:

- Sign Up for PureVPN

Head over to the PureVPN website and create your account. Be sure to select the Residential Network add-on with your plan.

- Download and Install the App

Once subscribed, download the PureVPN app on your preferred device, whether it’s Windows, macOS, iOS, Android, or even your browser.

- Log In to Your Account

Open the app and sign in using your PureVPN credentials to access all your premium features, including residential proxy support.

- Connect to a Residential IP

From the server list, choose the Residential Network option. You can select from US or UK residential IPs to make your traffic appear authentic and location-specific.

- Start Web Scraping Safely

With your scraper now running through a real residential IP, you can bypass blocks, access limited data, and collect information seamlessly, just like a regular user.
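Before pointing your scraper at a real target, it can be worth confirming that your traffic really leaves through the new IP. The sketch below asks the public echo service httpbin.org which address it sees. The function name current_public_ip is made up, and session can be any object with a Requests-style get method, such as requests.Session().

```python
def current_public_ip(session, echo_url="https://httpbin.org/ip"):
    """Ask an IP-echo service which address our requests appear to come from."""
    response = session.get(echo_url, timeout=10)
    response.raise_for_status()
    # httpbin answers with JSON shaped like {"origin": "203.0.113.7"}.
    return response.json()["origin"]
```

Run it once before connecting and once after. If the two addresses differ, your scraper is going out through the residential IP.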

Frequently Asked Questions

Is web scraping legal?

It depends. Scraping public data is usually fine, but scraping content behind logins or copyrighted material can be illegal. Always check the site’s policies.

Why do websites block scrapers?

Websites block scrapers mainly to protect their infrastructure, prevent abuse, and safeguard sensitive or competitive data. Automated requests can overload servers, skew analytics, or even give competitors an unfair advantage by harvesting large volumes of information. That’s why platforms like Amazon, LinkedIn, and Google deploy advanced anti-bot systems to detect and block scraping attempts.

Can I scrape without proxies?

Technically, yes. If you’re working on small, non-critical projects like scraping a personal blog or testing your first script, you might get by without proxies. But for any serious web scraping, such as targeting high-traffic sites or large datasets, proxies are a must to stay undetected, avoid CAPTCHAs, bypass regional limits, and reduce the chances of your scraper getting banned after just a few requests.

Why are residential proxies harder to block than datacenter proxies?

Residential proxies are tied to real devices (like laptops, routers, and mobile phones) and issued by legitimate ISPs, which makes your traffic look like it’s coming from a real user. This makes them much harder to detect and block compared to datacenter proxies, which are hosted on servers and often flagged quickly.

Final Thoughts

Building your first scraper is exciting: you’ve just automated your first data pipeline in minutes. But scraping at scale means dealing with blocks, CAPTCHAs, and regional limits.

That’s why PureVPN’s Residential Proxies are the perfect partner for anyone who wants to go from beginner to pro scraper: they keep you anonymous, bypass restrictions, and help you gather the data you need quickly, reliably, and securely.