The global conversation around minors’ online safety has moved decisively from whether age verification is necessary to how it should be implemented. Signals from lawmakers and regulators suggest that 2026 may represent a turning point, as age verification shifts from a best-practice recommendation to a legal requirement across multiple jurisdictions.

Age Assurance Is No Longer Optional

Over the past year, governments have increasingly rejected self-declared ages as insufficient. In the U.S., several states have passed or attempted to enforce age assurance laws covering social media platforms, age-appropriate design, and even app stores themselves. While many of these laws face constitutional challenges, regulators appear undeterred. If even portions survive judicial scrutiny, copycat legislation is likely to spread rapidly.

At the federal level, momentum is building. Congressional committees have already advanced multiple minors’ privacy and online safety bills, and the Federal Trade Commission has signaled increased scrutiny of age assurance technologies, including through planned workshops and public consultations. Together, these moves indicate a shift from theoretical debate to implementation-level guidance.

Europe Is Moving Toward Harmonization

In Europe, the approach is more structured but equally firm. Under the Digital Services Act (DSA), platforms are expected to assess risks to minors and adopt proportionate safeguards, with age verification emerging as one of several prominent mitigation tools rather than a universal mandate. The European Commission has also signaled interest in a harmonized age-verification standard, which would reduce fragmentation across member states.

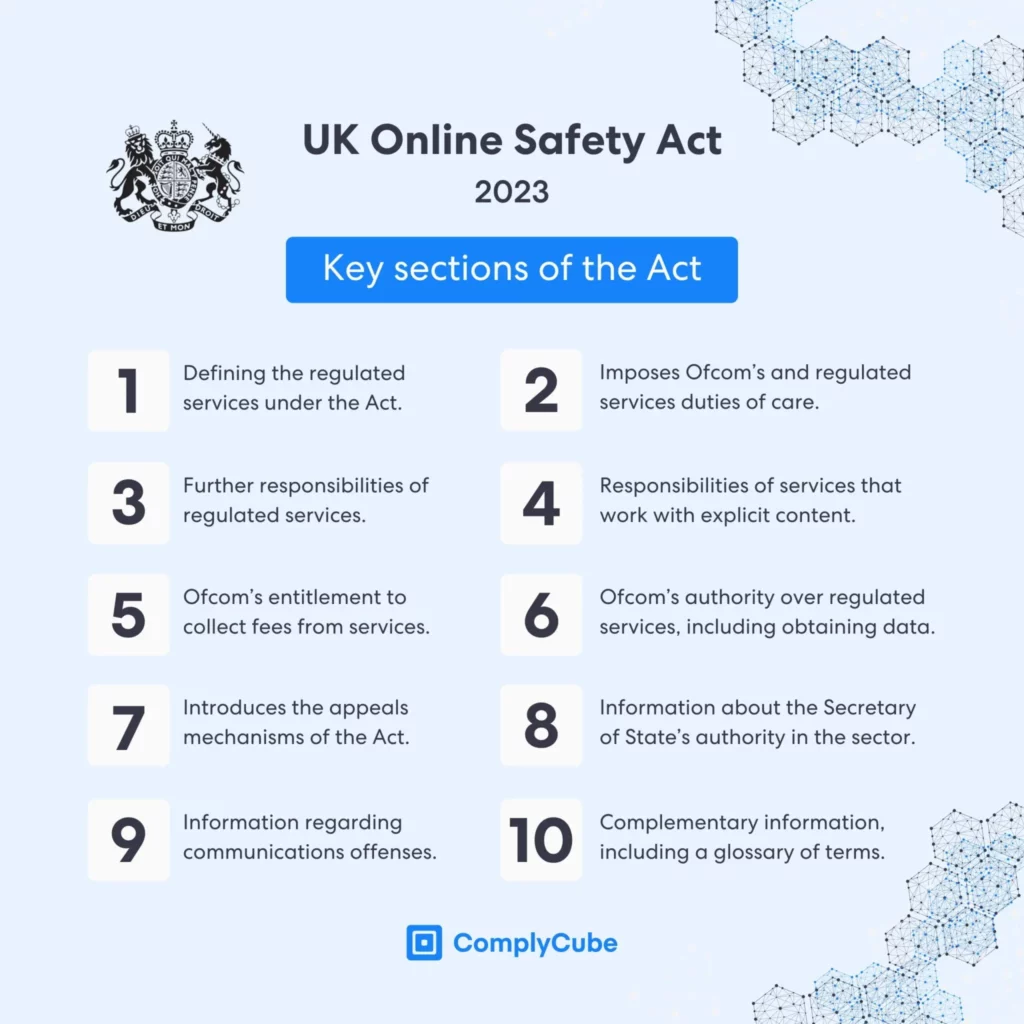

Meanwhile, the UK’s Online Safety Act has introduced clearer expectations for when and why age assurance must be used. Early enforcement focused on adult content sites, but regulators have already indicated that broader platform categories are expected to come under increased scrutiny starting in 2026, including social media, gaming, and content-sharing services.

App Stores Are Entering the Compliance Chain

A notable development is the expanding role of app stores. Certain U.S. states and Singapore are exploring regulatory approaches that would push responsibility upstream, requiring app marketplaces to provide age signals or even perform age checks themselves. This marks a structural shift: platforms may no longer control the entire age-verification process, but they will still be accountable for how minors experience their services.

Source: ComplyCube

AI, Adult Content, and Risk-Based Enforcement

Age verification is no longer limited to traditional social platforms. Laws are now extending to AI tools capable of generating adult or harmful content, as well as services hosting user-generated material. Regulators are increasingly applying a risk-based lens, asking whether a product is likely to be accessed by minors rather than whether it is explicitly marketed to them.

As a result, platforms offering generative AI, recommendation algorithms, or immersive experiences should expect age assurance obligations even if minors are not their primary audience.

What Platforms Should Prepare for in 2026

In 2026, companies should expect:

- Stricter regulatory enforcement: As age assurance frameworks mature, regulators are likely to move beyond warnings and voluntary guidance, placing greater emphasis on demonstrable compliance and measurable outcomes.

- Real-world verification effectiveness: Authorities are expected to assess whether age verification systems actually prevent minors from accessing restricted content, rather than relying on policy documentation alone.

- Greater scrutiny of age data: Platforms will face closer examination of how age-related data is collected, stored, minimized, and protected, particularly where sensitive identity information is involved.

- Proportionality and privacy safeguards: Companies will need to demonstrate that age assurance measures are proportionate, privacy-respecting, and do not unnecessarily restrict lawful users or freedom of expression.

The debate is no longer about whether age verification will be required, but about how defensible and privacy-respecting a platform’s approach is.
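To make the data-minimization point in the list above concrete, here is a minimal Python sketch of one possible approach: use a date of birth only for the moment of the check, then keep nothing but a yes/no result and the date it was produced. The `AgeSignal` record, the function names, and the threshold of 18 are illustrative assumptions, not requirements taken from any of the laws discussed here, and the applicable age varies by jurisdiction and service.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AgeSignal:
    """Hypothetical record: the only data retained after an age check."""
    is_adult: bool
    checked_on: date


def years_between(born: date, today: date) -> int:
    """Whole years elapsed from `born` to `today`."""
    had_birthday = (today.month, today.day) >= (born.month, born.day)
    return today.year - born.year - (0 if had_birthday else 1)


def minimized_age_signal(date_of_birth: date, today: date,
                         adult_age: int = 18) -> AgeSignal:
    """Reduce a date of birth to a yes/no signal.

    The date of birth is used only inside this function and is not
    part of the returned record. `adult_age` is an assumed threshold.
    """
    age = years_between(date_of_birth, today)
    return AgeSignal(is_adult=age >= adult_age, checked_on=today)
```

In this sketch a platform would store the `AgeSignal` and discard the underlying identity document or date of birth, so a later breach or audit exposes a boolean rather than sensitive identity information. Whether a boolean alone satisfies a given regulator is a legal question this example does not answer.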

Where Privacy Tools Fit In

As age verification expands, platforms and users alike are becoming more conscious of how identity and age data is transmitted and protected online. Because verification introduces new data-handling risks, tools like PureVPN can play a supporting role by helping users secure their connections, limit data exposure, and lower the risk of interception during verification, particularly on public or unsecured networks.

While VPNs do not replace age verification systems, they can complement broader privacy and security practices as digital identity checks become more common.

Online Safety Act Faces Heat Over Free Speech, Tech Burdens, and Site Bans

A petition to repeal the Online Safety Act has drawn more than 330,000 signatures, with critics arguing that the law’s scope unfairly targets niche communities, imposes onerous technical burdens on smaller platforms, and limits free expression online.

Several adult and Rule 34 sites have already blocked UK traffic entirely, saying compliance is too costly and intrusive, even when content is fictional or niche in nature.